¶ NPU programming

The RK3588 integrates an NPU rated at 6 TOPS. The NPU currently uses Rockchip's self-developed architecture and can only be operated through non-open-source drivers and libraries.

Rockchip's NPU SDK is divided into two parts. The PC side uses rknn-toolkit2, which handles model conversion, inference, and performance evaluation. Specifically, it converts models from mainstream frameworks such as Caffe, TensorFlow, TensorFlow Lite, ONNX, DarkNet, and PyTorch to RKNN models, and can then use the RKNN model on the PC to simulate inference and to evaluate computation time and memory overhead. The board side provides the RKNN runtime environment, which contains a set of C API libraries, driver modules for communicating with the NPU, executable programs, and so on.

This article describes how to use the RKNN NPU SDK on the board side under Android.

¶ Related Resources Download Links

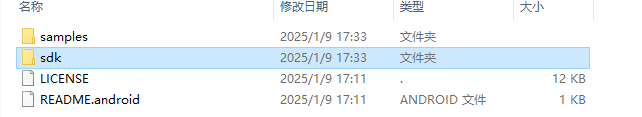

The contents of rknn-toolkit2 after downloading and unpacking are shown below. All relative paths mentioned later in this article are relative to this directory.

The NDK can be used to cross-compile RKNN applications after downloading and unpacking.

¶ About the model

Whether on Android or Linux, commonly used models such as ONNX and TFLite are converted to RKNN models in the same way: with the rknn-toolkit2 tool in a Linux environment on the PC. Readers who want to generate the models themselves can refer to these two wiki articles.

This article directly uses the converted models provided by the SDK, which are located under each demo in rknpu2/examples/. For example, the mobilenet model used in this article is rknpu2/examples/rknn_mobilenet_demo/model/RK3588/mobilenet_v1.rknn

¶ Board-side program deployment

The kernel ships with the NPU driver by default, which can be confirmed with the following command:

rk3588_u:/ $ cat /sys/kernel/debug/rknpu/version

RKNPU driver: v0.9.8

Verify the version of the RKNN runtime library (librknnrt.so) on the board:

rk3588_u:/ $ strings /vendor/lib64/librknnrt.so | grep -i "librknnrt version"

librknnrt version: 2.3.0 (c949ad889d@2024-11-07T11:34:26)
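When deciding whether the runtime needs updating, the version line above can be compared against a minimum version programmatically. The following is a minimal C++ sketch, not part of the SDK: `parse_rknnrt_version` and `version_at_least` are hypothetical helpers, and the sketch assumes the line always has the `librknnrt version: X.Y.Z (...)` shape seen in the output above.

```cpp
#include <cstdio>
#include <tuple>

// Hypothetical helper: extract X.Y.Z from a "librknnrt version: X.Y.Z (...)" line.
// Returns false if the line does not match the assumed format.
bool parse_rknnrt_version(const char* line, int* major, int* minor, int* patch) {
    return std::sscanf(line, "librknnrt version: %d.%d.%d", major, minor, patch) == 3;
}

// Hypothetical helper: true if the version in `line` is >= major.minor.patch.
bool version_at_least(const char* line, int major, int minor, int patch) {
    int a = 0, b = 0, c = 0;
    if (!parse_rknnrt_version(line, &a, &b, &c)) {
        return false;  // unparseable lines are treated as "too old"
    }
    // std::tuple compares lexicographically, which matches version ordering.
    return std::make_tuple(a, b, c) >= std::make_tuple(major, minor, patch);
}
```

Treating an unparseable line as "too old" is a deliberate choice in this sketch, so that an unexpected format prompts a manual check rather than passing silently.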

If you need a newer version, you can update librknnrt.so as follows. Run the following commands from a terminal on your PC:

adb root

adb remount

adb push rknpu2/runtime/Android/librknn_api/arm64-v8a/librknnrt.so /vendor/lib64

adb reboot

¶ Cross-compile the RKNN executable program

Go to the rknn_mobilenet_demo directory and run the following commands:

export ANDROID_NDK_PATH={NDK PATH}

./build-android.sh -t rk3588 -a arm64-v8a -b Debug

After the build finishes, the directory install/rknn_mobilenet_demo_Android is created under the current directory. It contains the executable program, the model, and the sample images.

¶ Execute on board

Push the install/rknn_mobilenet_demo_Android directory to the board, for example with adb push.

Then run the following commands from that directory on the board:

LD_LIBRARY_PATH=/vendor/lib64 ./rknn_mobilenet_demo ./model/RK3588/mobilenet_v1.rknn ./model/dog_224x224.jpg

model input num: 1, output num: 1

input tensors:

index=0, name=input, n_dims=4, dims=[1, 224, 224, 3], n_elems=150528, size=150528, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=0, scale=0.007812

output tensors:

index=0, name=MobilenetV1/Predictions/Reshape_1, n_dims=2, dims=[1, 1001, 0, 0], n_elems=1001, size=2002, fmt=UNDEFINED, type=FP16, qnt_type=AFFINE, zp=0, scale=1.000000

rknn_run

--- Top5 ---

156: 0.884766

155: 0.054016

205: 0.003677

284: 0.002974

285: 0.000189

LD_LIBRARY_PATH=/vendor/lib64 ./rknn_mobilenet_demo ./model/RK3588/mobilenet_v1.rknn ./model/cat_224x224.jpg

model input num: 1, output num: 1

input tensors:

index=0, name=input, n_dims=4, dims=[1, 224, 224, 3], n_elems=150528, size=150528, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=0, scale=0.007812

output tensors:

index=0, name=MobilenetV1/Predictions/Reshape_1, n_dims=2, dims=[1, 1001, 0, 0], n_elems=1001, size=2002, fmt=UNDEFINED, type=FP16, qnt_type=AFFINE, zp=0, scale=1.000000

rknn_run

--- Top5 ---

283: 0.407227

282: 0.172485

286: 0.155762

278: 0.059113

279: 0.042603
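The tensor attributes printed in the logs above can be cross-checked by hand. The following is a minimal C++ sketch, not part of the SDK: `element_count` and `dequantize_affine` are hypothetical names introduced here. It assumes the common affine quantization convention, real = (q - zp) * scale, and that the printed scale=0.007812 is 1/128 truncated by the log format.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: multiply out the dimensions to get the element count (n_elems).
std::size_t element_count(const std::vector<std::uint32_t>& dims) {
    std::size_t n = 1;
    for (std::uint32_t d : dims) {
        n *= d;
    }
    return n;
}

// Affine dequantization under the assumed convention: real = (q - zp) * scale.
float dequantize_affine(std::int8_t q, std::int32_t zp, float scale) {
    return static_cast<float>(static_cast<std::int32_t>(q) - zp) * scale;
}
```

With dims=[1, 224, 224, 3] the element count is 150528, matching n_elems and size of the INT8 input (one byte per element). For the FP16 output, 1001 elements at two bytes each give the logged size of 2002.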

¶ APK calling NPU

The basic idea for calling the NPU from an APK is to turn the executable from the previous section into a function exposed through a JNI interface, and then call it from Java.

In addition, the rknn_mobilenet_demo source code uses OpenCV to decode and scale the image; in Java, Bitmap can be used instead.

The implementation is as follows. Based on the app created in the first chapter of the tutorial, add a button to start the NPU demo.

Add the following to onCreate in MainActivity:

button9.setText("npu test");

button9.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View v) {

byte[] inputData = ImageDecode.processImageToRGBData("/sdcard/test.jpg");

if (inputData != null) {

RKNNTest(inputData,"/sdcard/mobilenet_v1.rknn");

}

}

});

The ImageDecode class decodes and scales the picture using Bitmap. It is implemented as follows:

package com.example.testdemo;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.util.Log;

public class ImageDecode {

static String TAG = "com.example.testdemo";

private static final int MODEL_IN_WIDTH = 224;

private static final int MODEL_IN_HEIGHT = 224;

public static byte[] processImageToRGBData(String imgPath) {

Bitmap origBitmap = BitmapFactory.decodeFile(imgPath);

if (origBitmap == null) {

Log.e(TAG, "Failed to load image: " + imgPath);

return null;

}

Bitmap scaledBitmap = origBitmap;

if (origBitmap.getWidth() != MODEL_IN_WIDTH || origBitmap.getHeight() != MODEL_IN_HEIGHT) {

Log.i(TAG, "Resizing from " + origBitmap.getWidth() + "x" + origBitmap.getHeight() +

" to " + MODEL_IN_WIDTH + "x" + MODEL_IN_HEIGHT);

scaledBitmap = Bitmap.createScaledBitmap(

origBitmap, MODEL_IN_WIDTH, MODEL_IN_HEIGHT, true);

origBitmap.recycle();

}

byte[] rgbData = extractRGBFromARGB(scaledBitmap);

scaledBitmap.recycle();

return rgbData;

}

private static byte[] extractRGBFromARGB(Bitmap bitmap) {

int width = bitmap.getWidth();

int height = bitmap.getHeight();

int[] pixels = new int[width * height];

bitmap.getPixels(pixels, 0, width, 0, 0, width, height);

byte[] rgbData = new byte[width * height * 3];

for (int i = 0; i < pixels.length; i++) {

int pixel = pixels[i];

rgbData[i * 3] = (byte) ((pixel >> 16) & 0xFF); // R

rgbData[i * 3 + 1] = (byte) ((pixel >> 8) & 0xFF); // G

rgbData[i * 3 + 2] = (byte) (pixel & 0xFF); // B

}

return rgbData;

}

}
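The byte packing done by extractRGBFromARGB can be checked off-device. The following is a minimal C++ sketch of the same step; `argb_to_rgb888` is a hypothetical name, and the sketch assumes each pixel is packed as 0xAARRGGBB, which is the layout Bitmap.getPixels returns.

```cpp
#include <cstdint>
#include <vector>

// Unpack 0xAARRGGBB pixels into an interleaved R,G,B byte stream, dropping alpha.
std::vector<std::uint8_t> argb_to_rgb888(const std::vector<std::uint32_t>& pixels) {
    std::vector<std::uint8_t> rgb;
    rgb.reserve(pixels.size() * 3);
    for (std::uint32_t p : pixels) {
        rgb.push_back(static_cast<std::uint8_t>((p >> 16) & 0xFF));  // R
        rgb.push_back(static_cast<std::uint8_t>((p >> 8) & 0xFF));   // G
        rgb.push_back(static_cast<std::uint8_t>(p & 0xFF));          // B
    }
    return rgb;
}
```

For a 224x224 image this produces 224 * 224 * 3 = 150528 bytes, which matches the size of the model's NHWC input tensor.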

Note that the NPU needs the input as a raw RGB888 data stream, so processImageToRGBData returns a byte[] array holding that stream. Next, the original rknn_mobilenet_demo source code is wrapped as a JNI function, implemented as follows:

#include "rknn_api.h"

#include <stdint.h>

#include <stdio.h>

#include <stdlib.h>

#include <sys/time.h>

#include <fstream>

#include <iostream>

#include <jni.h>

#include <cstring>

#include <android/log.h>

#include <dlfcn.h>

#define TAG "RKNN_TEST"

#define LOGD(...) __android_log_print(ANDROID_LOG_DEBUG, TAG, __VA_ARGS__)

#define LOGI(...) __android_log_print(ANDROID_LOG_INFO, TAG, __VA_ARGS__)

#define LOGE(...) __android_log_print(ANDROID_LOG_ERROR, TAG, __VA_ARGS__)

using namespace std;

int is_load_rknn_api = 0;

typedef int (*rknn_query_func)(rknn_context, rknn_query_cmd, void*, uint32_t);

typedef int (*rknn_init_func)(rknn_context* context, void* model, uint32_t size, uint32_t flag, rknn_init_extend* extend);

typedef int (*rknn_inputs_set_func)(rknn_context context, uint32_t n_inputs, rknn_input inputs[]);

typedef int (*rknn_run_func)(rknn_context context, rknn_run_extend* extend);

typedef int (*rknn_outputs_get_func)(rknn_context context, uint32_t n_outputs, rknn_output outputs[], rknn_output_extend* extend);

typedef int (*rknn_outputs_release_func)(rknn_context context, uint32_t n_ouputs, rknn_output outputs[]);

typedef int (*rknn_destroy_func)(rknn_context context);

rknn_query_func rknn_query;

rknn_init_func rknn_init;

rknn_inputs_set_func rknn_inputs_set;

rknn_run_func rknn_run;

rknn_outputs_get_func rknn_outputs_get;

rknn_outputs_release_func rknn_outputs_release;

rknn_destroy_func rknn_destroy;

void load_rknn_api(void)

{

void* handle;

char* error;

handle = dlopen("/data/librknnrt.so", RTLD_LAZY);

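if (!handle) {

// Log why the library could not be opened instead of failing silently

LOGE("dlopen /data/librknnrt.so fail: %s\n", dlerror());

return;

}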

dlerror();

rknn_query = (rknn_query_func)dlsym(handle, "rknn_query");

if ((error = dlerror()) != NULL) {

dlclose(handle);

return;

}

rknn_init = (rknn_init_func)dlsym(handle, "rknn_init");

if ((error = dlerror()) != NULL) {

dlclose(handle);

return;

}

rknn_inputs_set = (rknn_inputs_set_func)dlsym(handle, "rknn_inputs_set");

if ((error = dlerror()) != NULL) {

dlclose(handle);

return;

}

rknn_run = (rknn_run_func)dlsym(handle, "rknn_run");

if ((error = dlerror()) != NULL) {

dlclose(handle);

return;

}

rknn_outputs_get = (rknn_outputs_get_func)dlsym(handle, "rknn_outputs_get");

if ((error = dlerror()) != NULL) {

dlclose(handle);

return;

}

rknn_outputs_release = (rknn_outputs_release_func)dlsym(handle, "rknn_outputs_release");

if ((error = dlerror()) != NULL) {

dlclose(handle);

return;

}

rknn_destroy = (rknn_destroy_func)dlsym(handle, "rknn_destroy");

if ((error = dlerror()) != NULL) {

dlclose(handle);

return;

}

is_load_rknn_api = 1;

return;

}

/*-------------------------------------------

Functions

-------------------------------------------*/

static void dump_tensor_attr(rknn_tensor_attr *attr) {

LOGI(" index=%d, name=%s, n_dims=%d, dims=[%d, %d, %d, %d], n_elems=%d, size=%d, fmt=%s, type=%s, qnt_type=%s, "

"zp=%d, scale=%f\n",

attr->index, attr->name, attr->n_dims, attr->dims[0], attr->dims[1], attr->dims[2],

attr->dims[3],

attr->n_elems, attr->size, get_format_string(attr->fmt), get_type_string(attr->type),

get_qnt_type_string(attr->qnt_type), attr->zp, attr->scale);

}

static unsigned char *load_model(const char *filename, int *model_size) {

FILE *fp = fopen(filename, "rb");

if (fp == nullptr) {

LOGE("fopen %s fail!\n", filename);

return NULL;

}

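fseek(fp, 0, SEEK_END);

int model_len = ftell(fp);

if (model_len <= 0) {

LOGE("ftell %s fail!\n", filename);

fclose(fp);

return NULL;

}

unsigned char *model = (unsigned char *) malloc(model_len);

if (model == NULL) {

LOGE("malloc %d bytes fail!\n", model_len);

fclose(fp);

return NULL;

}

fseek(fp, 0, SEEK_SET);

if ((size_t) model_len != fread(model, 1, model_len, fp)) {

LOGE("fread %s fail!\n", filename);

free(model);

fclose(fp); // close the file on the error path as well

return NULL;

}

*model_size = model_len;

fclose(fp);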

return model;

}

static int rknn_GetTop(float *pfProb, float *pfMaxProb, uint32_t *pMaxClass, uint32_t outputCount,

uint32_t topNum) {

uint32_t i, j;

#define MAX_TOP_NUM 20

if (topNum > MAX_TOP_NUM)

return 0;

memset(pfMaxProb, 0, sizeof(float) * topNum);

memset(pMaxClass, 0xff, sizeof(uint32_t) * topNum); // 0xffffffff marks an empty slot

for (j = 0; j < topNum; j++) {

for (i = 0; i < outputCount; i++) {

// Skip classes already selected in an earlier pass (works for any topNum)

bool taken = false;

for (uint32_t k = 0; k < j; k++) {

if (i == pMaxClass[k]) {

taken = true;

break;

}

}

if (taken) {

continue;

}

if (pfProb[i] > *(pfMaxProb + j)) {

*(pfMaxProb + j) = pfProb[i];

*(pMaxClass + j) = i;

}

}

}

return 1;

}

/*-------------------------------------------

Main Function

-------------------------------------------*/

extern "C" JNIEXPORT int JNICALL

Java_com_example_testdemo_MainActivity_RKNNTest(JNIEnv *env, jobject thiz, jbyteArray inputData,

jstring jmodel_path) {

const int MODEL_IN_WIDTH = 224;

const int MODEL_IN_HEIGHT = 224;

const int MODEL_IN_CHANNELS = 3;

rknn_context ctx = 0;

int ret;

int model_len = 0;

unsigned char *model = NULL;

const char *model_path = env->GetStringUTFChars(jmodel_path, nullptr);

jbyte* jniData = env->GetByteArrayElements(inputData, nullptr);

int dataLength = env->GetArrayLength(inputData);

if(is_load_rknn_api == 0) {

load_rknn_api();

if(is_load_rknn_api == 0) {

LOGE("load_rknn_api fail!\n");

return -1;

}

LOGI("load_rknn_api success!\n");

}

// Load RKNN Model

LOGI("model_path is %s!",model_path);

model = load_model(model_path, &model_len);

if (model == NULL) {

LOGE("load_model fail!\n");

return -1;

}

LOGI("model is %p, len %d !",model,model_len);

ret = rknn_init(&ctx, model, model_len, 0, NULL);

if (ret < 0) {

LOGE("rknn_init fail! ret=%d\n", ret);

return -1;

}

LOGI("rknn_init success!\n");

// Get Model Input Output Info

rknn_input_output_num io_num;

ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));

if (ret != RKNN_SUCC) {

LOGE("rknn_query fail! ret=%d\n", ret);

return -1;

}

LOGI("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);

LOGI("input tensors:\n");

rknn_tensor_attr input_attrs[io_num.n_input];

memset(input_attrs, 0, sizeof(input_attrs));

for (int i = 0; i < io_num.n_input; i++) {

input_attrs[i].index = i;

ret = rknn_query(ctx, RKNN_QUERY_INPUT_ATTR, &(input_attrs[i]), sizeof(rknn_tensor_attr));

if (ret != RKNN_SUCC) {

LOGE("rknn_query fail! ret=%d\n", ret);

return -1;

}

dump_tensor_attr(&(input_attrs[i]));

}

LOGI("output tensors:\n");

rknn_tensor_attr output_attrs[io_num.n_output];

memset(output_attrs, 0, sizeof(output_attrs));

for (int i = 0; i < io_num.n_output; i++) {

output_attrs[i].index = i;

ret = rknn_query(ctx, RKNN_QUERY_OUTPUT_ATTR, &(output_attrs[i]), sizeof(rknn_tensor_attr));

if (ret != RKNN_SUCC) {

LOGE("rknn_query fail! ret=%d\n", ret);

return -1;

}

dump_tensor_attr(&(output_attrs[i]));

}

// Set Input Data

rknn_input inputs[1];

memset(inputs, 0, sizeof(inputs));

inputs[0].index = 0;

inputs[0].type = RKNN_TENSOR_UINT8;

// inputs[0].size = img.cols * img.rows * img.channels() * sizeof(uint8_t);

inputs[0].size = dataLength;

inputs[0].fmt = RKNN_TENSOR_NHWC;

// inputs[0].buf = img.data;

inputs[0].buf = (void*)jniData;

ret = rknn_inputs_set(ctx, io_num.n_input, inputs);

if (ret < 0) {

LOGE("rknn_input_set fail! ret=%d\n", ret);

return -1;

}

// Run

LOGI("rknn_run\n");

ret = rknn_run(ctx, nullptr);

if (ret < 0) {

LOGE("rknn_run fail! ret=%d\n", ret);

return -1;

}

// Get Output

rknn_output outputs[1];

memset(outputs, 0, sizeof(outputs));

outputs[0].want_float = 1;

ret = rknn_outputs_get(ctx, 1, outputs, NULL);

if (ret < 0) {

LOGE("rknn_outputs_get fail! ret=%d\n", ret);

return -1;

}

// Post Process

for (int i = 0; i < io_num.n_output; i++) {

uint32_t MaxClass[5];

float fMaxProb[5];

// want_float = 1 was set above, so the buffer holds 32-bit floats

float *buffer = (float *) outputs[i].buf;

uint32_t sz = outputs[i].size / sizeof(float);

rknn_GetTop(buffer, fMaxProb, MaxClass, sz, 5);

LOGI(" --- Top5 ---\n");

for (int j = 0; j < 5; j++) {

LOGI("%3d: %8.6f\n", MaxClass[j], fMaxProb[j]);

}

}

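// Release rknn_outputs

rknn_outputs_release(ctx, 1, outputs);

// Release

if (ctx > 0) {

rknn_destroy(ctx);

}

if (model) {

free(model);

}

// Release the JNI buffers acquired at the top of the function.

// JNI_ABORT: the input bytes were not modified, so nothing is copied back.

// Note: the early-return error paths above skip this cleanup.

env->ReleaseByteArrayElements(inputData, jniData, JNI_ABORT);

env->ReleaseStringUTFChars(jmodel_path, model_path);

return 0;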

}
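The top-5 selection performed by rknn_GetTop in the listing above can also be expressed with the standard library. The following is a minimal sketch of that alternative, not the demo's own code: `top_n` is a hypothetical helper that uses std::partial_sort on an index array and returns (class index, probability) pairs in descending order of probability.

```cpp
#include <algorithm>
#include <cstdint>
#include <numeric>
#include <utility>
#include <vector>

// Hypothetical helper: return the n highest-scoring (class, probability) pairs.
// If n exceeds count, all entries are returned.
std::vector<std::pair<std::uint32_t, float>> top_n(const float* prob,
                                                   std::uint32_t count,
                                                   std::uint32_t n) {
    std::vector<std::uint32_t> idx(count);
    std::iota(idx.begin(), idx.end(), 0u);  // 0, 1, ..., count - 1
    n = std::min(n, count);
    // Only the first n positions need to be in sorted order.
    std::partial_sort(idx.begin(), idx.begin() + n, idx.end(),
                      [prob](std::uint32_t a, std::uint32_t b) {
                          return prob[a] > prob[b];
                      });
    std::vector<std::pair<std::uint32_t, float>> result;
    result.reserve(n);
    for (std::uint32_t k = 0; k < n; ++k) {
        result.emplace_back(idx[k], prob[idx[k]]);
    }
    return result;
}
```

Unlike rknn_GetTop, this sketch has no fixed upper limit on n and does not skip entries whose probability is zero or negative; the order of exactly equal probabilities is unspecified.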

The JNI source uses the dl API (dlopen and dlsym) to resolve the RKNN functions from /data/librknnrt.so, which is the copy of librknnrt.so pushed to the board.
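The repeated dlsym and dlerror pattern in load_rknn_api can be factored into one helper. The following is a minimal sketch under that idea: `resolve_symbol` is a hypothetical name, not an SDK function, and the sketch only relies on the POSIX dlsym and dlerror calls.

```cpp
#include <dlfcn.h>
#include <cstdio>

// Hypothetical helper: look up `name` in an already-opened library and store
// the address in *out. Returns false (and leaves *out untouched) on failure.
template <typename Fn>
bool resolve_symbol(void* handle, const char* name, Fn* out) {
    dlerror();  // clear any stale error state before the lookup
    void* sym = dlsym(handle, name);
    const char* err = dlerror();
    if (err != nullptr || sym == nullptr) {
        std::fprintf(stderr, "dlsym(%s) failed: %s\n", name,
                     err != nullptr ? err : "symbol is null");
        return false;
    }
    *out = reinterpret_cast<Fn>(sym);
    return true;
}
```

With the typedefs and globals from the listing, each lookup would then reduce to a call such as `resolve_symbol(handle, "rknn_init", &rknn_init)`, and the caller can close the handle once if any of the calls returns false.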

Java_com_example_testdemo_MainActivity_RKNNTest is the JNI entry point. It takes two parameters: the first is the RGB data stream, and the second is the path of the RKNN model.

From Java, simply call it as RKNNTest.

Then push the dynamic library, the image, and the RKNN model to the expected locations on the board:

adb push rknpu2\examples\rknn_mobilenet_demo\model\RK3588\mobilenet_v1.rknn /sdcard/mobilenet_v1.rknn

adb push rknpu2\examples\rknn_mobilenet_demo\model\dog_224x224.jpg /sdcard/test.jpg

adb push rknpu2\runtime\Android\librknn_api\arm64-v8a\librknnrt.so /data/librknnrt.so

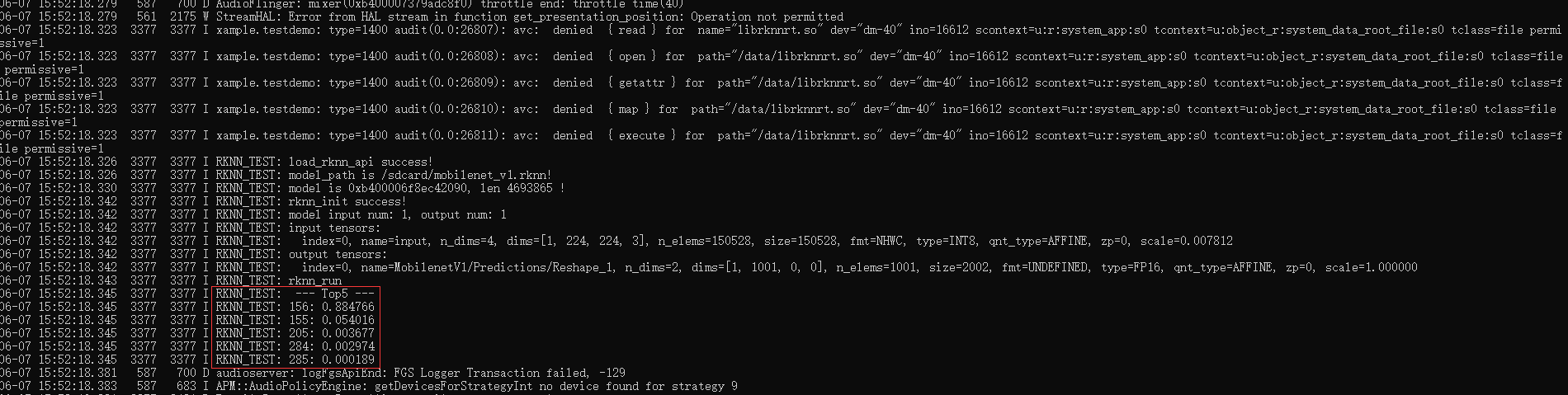

Then install the APK on the board and press the "npu test" button. The following messages appear in logcat: