¶ Camera Programming

The RK3588 has a powerful video input subsystem. It supports up to 2 MIPI DCPHY inputs plus 4 MIPI CSI DPHY inputs (2 lanes each), for a total of 6 camera inputs.

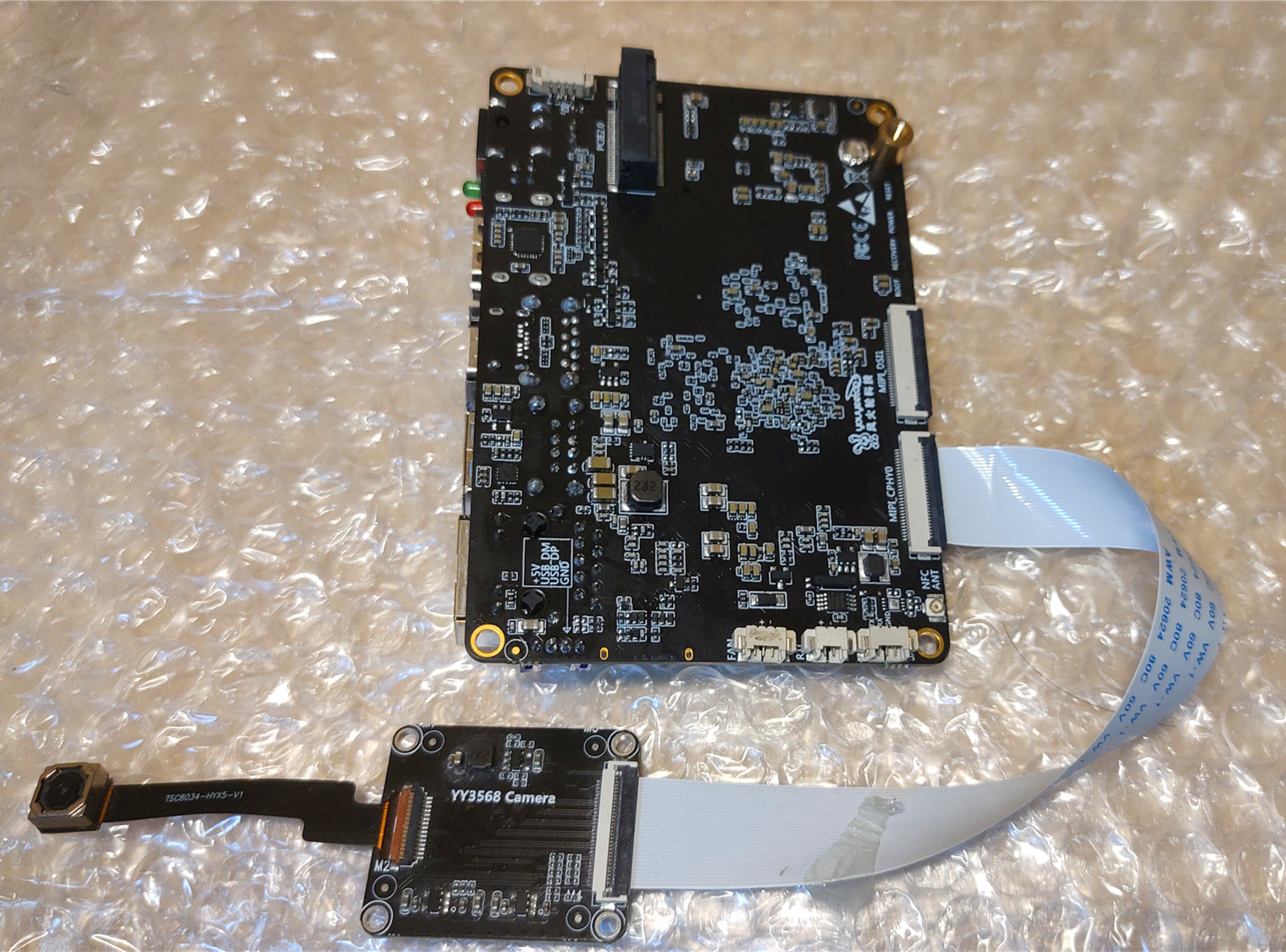

The following example shows how to program a camera by connecting a GC8034 camera to MIPI DCPHY 0. The GC8034 supports resolutions up to 3264x2448 pixels. The wiring diagram is as follows:

Linux operates cameras through the V4L2 (Video4Linux2) framework. V4L2 is extensive; this article covers only its application (userspace) programming side.

Reading and writing the /dev/video* nodes requires root privileges, so both command-line tools and compiled C programs that operate the camera must run as root. If you are using SSH or LXTerminal, first run the following command to become root:

```shell
sudo su
```
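A C program can check for the required access up front and print a clearer message than a failed open(). This is a minimal sketch; can_open_rw and check_video_node_access are hypothetical helper names, built only on the POSIX calls access() and geteuid().

```c
#include <stdio.h>
#include <unistd.h>

/* Return 1 if the calling process may open `path` for reading and
 * writing, 0 otherwise. access() checks the real user and group IDs,
 * i.e. the account the program was started from. */
static int can_open_rw(const char *path)
{
    return access(path, R_OK | W_OK) == 0;
}

/* Return 0 if `path` is usable; otherwise print the reason and,
 * when not running as root, a hint, and return -1. */
static int check_video_node_access(const char *path)
{
    if (can_open_rw(path))
        return 0;
    perror(path);
    if (geteuid() != 0)
        fprintf(stderr, "hint: not running as root, run `sudo su` first\n");
    return -1;
}
```

Calling `check_video_node_access("/dev/video22")` at the start of a program turns a later, less obvious open() or ioctl() failure into an immediate message.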

The ISP-related services are not started at boot by default, and the kernel is currently configured to feed this camera's data into the ISP, so start the ISP-related services first:

```shell
/rockchip-test/camera/camera_rkaiq_test.sh
```

Whether you use the command line or C to operate a camera, the first step is to find its video node. Under Linux, a camera pipeline (a combination of camera, ISP and CIF) is represented in the system as a media device.

The board has three media devices, /dev/media0, /dev/media1 and /dev/media2, and you need to find the one that corresponds to this camera. Run the following commands:

```shell
media-ctl -p -d /dev/media0
media-ctl -p -d /dev/media1
media-ctl -p -d /dev/media2
```

The output shows the pipeline of each of the three media devices.

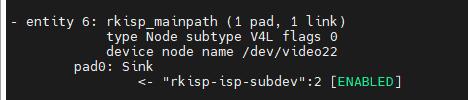

Find the entity named rkisp_mainpath, which is the video node of the ISP (rkisp) main path.

In this example, that entity is /dev/video22, so /dev/video22 is the node to operate.
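The node number can change between kernel builds, so a program may prefer to look it up by name. The sketch below scans /sys/class/video4linux and matches each node's `name` attribute, assuming that attribute equals the entity name media-ctl prints (normally true, since both come from the video device's name). find_video_node is a hypothetical helper. If several nodes share the same name (for example, more than one ISP instance), it returns whichever one readdir reports first, so confirm the result against the media-ctl output.

```c
#include <dirent.h>
#include <stdio.h>
#include <string.h>

/* Look through `sysfs_dir` (normally /sys/class/video4linux) for a
 * videoN entry whose `name` attribute equals `wanted`, and store
 * "/dev/videoN" in `out`. Returns 0 if found, -1 otherwise. */
static int find_video_node(const char *sysfs_dir, const char *wanted,
                           char *out, size_t out_len)
{
    DIR *dir = opendir(sysfs_dir);
    if (dir == NULL)
        return -1;

    int result = -1;
    struct dirent *ent;
    while ((ent = readdir(dir)) != NULL) {
        if (strncmp(ent->d_name, "video", 5) != 0)
            continue;                          /* skip ".", ".." and others */

        char path[512];
        snprintf(path, sizeof(path), "%s/%s/name", sysfs_dir, ent->d_name);
        FILE *f = fopen(path, "r");
        if (f == NULL)
            continue;

        char name[64] = "";
        if (fgets(name, sizeof(name), f) != NULL)
            name[strcspn(name, "\n")] = '\0';  /* drop trailing newline */
        fclose(f);

        if (strcmp(name, wanted) == 0) {
            snprintf(out, out_len, "/dev/%s", ent->d_name);
            result = 0;
            break;
        }
    }
    closedir(dir);
    return result;
}
```

On the board in this example, `find_video_node("/sys/class/video4linux", "rkisp_mainpath", node, sizeof(node))` would be expected to store "/dev/video22" in `node`.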

¶ Operating the camera from the command line

The v4l2-ctl tool (part of v4l-utils) operates video nodes from the command line. To capture images, run:

```shell
v4l2-ctl -d /dev/video22 --set-fmt-video=width=1632,height=1224,pixelformat=NV12 --stream-mmap --stream-skip=3 --stream-to=/tmp/isp.out --stream-count=10 --stream-poll
```

- `-d`: the video node to use.
- `--set-fmt-video`: the width, height and pixel format of the output image. The ISP can downscale, so the size may be smaller than the sensor's full resolution (1632x1224 is half of 3264x2448 in each dimension). The format can be NV12 or NV16 and does not depend on the camera's data format: the sensor usually outputs raw RGB (Bayer) data, which the ISP converts to NV12 or NV16.
- `--stream-mmap`: use memory-mapped (mmap) buffers.
- `--stream-skip`: discard this many initial frames before saving starts.
- `--stream-to`: the output file.
- `--stream-count`: the number of frames to capture.
- `--stream-poll`: capture in non-blocking mode, waiting for each frame to become ready before dequeuing it.
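The `--stream-poll` option waits for each frame to become ready before dequeuing it. The same idea in C, using poll(), is sketched below; wait_readable is a hypothetical helper and this is an illustration of the technique, not v4l2-ctl's own code. For a V4L2 capture node, POLLIN means a filled buffer is ready for VIDIOC_DQBUF.

```c
#include <errno.h>
#include <poll.h>

/* Wait up to timeout_ms for `fd` to have data (for a V4L2 capture node:
 * a filled buffer ready for VIDIOC_DQBUF).
 * Returns 1 if readable, 0 on timeout, -1 on error or hang-up.
 * A signal restarts the wait with the full timeout. */
static int wait_readable(int fd, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    for (;;) {
        int r = poll(&pfd, 1, timeout_ms);
        if (r < 0 && errno == EINTR)
            continue;
        if (r <= 0)
            return r;                            /* 0: timeout, -1: poll failed */
        return (pfd.revents & POLLIN) ? 1 : -1;  /* POLLERR/POLLHUP without data */
    }
}
```

A capture loop would call `wait_readable(dev_fd, 2000)` and only issue VIDIOC_DQBUF when it returns 1.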

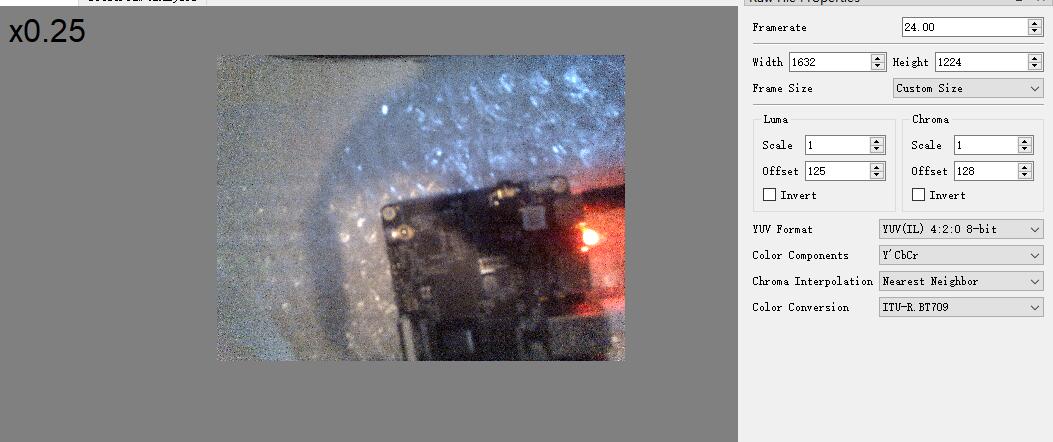

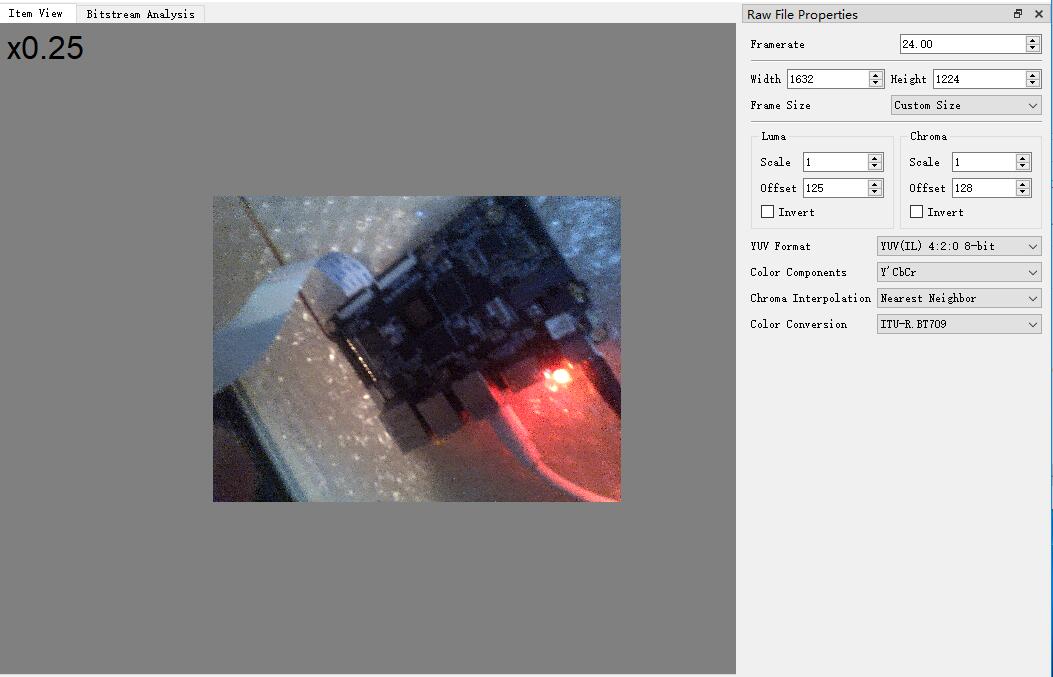

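After the command finishes, the NV12 frames are saved in /tmp/isp.out. Copy the file to a PC with adb or over SSH (for example with scp). On Windows, you can view it with YUView; enter the resolution (1632x1224) and pixel format (NV12) when opening the file, because a raw file carries no header describing them.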
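The capture file can hold several frames back to back (up to the `--stream-count` value). To process one of them in your own program rather than in YUView, note that a tightly packed NV12 frame is a full-size Y plane followed by a half-height interleaved CbCr plane, so each frame occupies width x height x 3/2 bytes (2,996,352 bytes at 1632x1224). The sketch below assumes no line padding; if the driver reports a larger bytesperline or sizeimage, use that value instead. nv12_frame_size and read_nv12_frame are hypothetical helper names.

```c
#include <assert.h>
#include <stdio.h>

/* Size of one tightly packed NV12 frame: a full-resolution Y plane
 * followed by an interleaved CbCr plane at half height. */
static size_t nv12_frame_size(unsigned width, unsigned height)
{
    assert(width % 2 == 0 && height % 2 == 0);  /* NV12 needs even sizes */
    return (size_t)width * height * 3 / 2;
}

/* Copy frame `index` of a file holding back-to-back NV12 frames into
 * `dst`, which must hold nv12_frame_size(width, height) bytes.
 * Returns 0 on success, -1 if the frame is missing or incomplete. */
static int read_nv12_frame(FILE *src, unsigned width, unsigned height,
                           long index, unsigned char *dst)
{
    size_t size = nv12_frame_size(width, height);
    if (fseek(src, index * (long)size, SEEK_SET) != 0)
        return -1;
    return fread(dst, 1, size, src) == size ? 0 : -1;
}
```

For example, `read_nv12_frame(f, 1632, 1224, 9, frame)` would fetch the tenth frame of /tmp/isp.out opened with `fopen("/tmp/isp.out", "rb")`, if the file holds that many.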

¶ Programming the camera in C

¶ Demo introduction

The following demo grabs a frame from the camera and stores it in a file:

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <sys/select.h>
#include <sys/time.h>
#include <sys/types.h>
#include <unistd.h>
#include <linux/videodev2.h>

#define FMT_NUM_PLANES 1   // NV12 is delivered in a single plane
#define CAPTURE_FRAMES 3   // dequeue 3 frames, keep only the last one

struct frame_buffer {
    void *start;
    size_t length;
};

int main(int argc, char **argv)
{
    int status = EXIT_FAILURE;
    int streaming = 0;
    unsigned int n_buffers = 0;           // buffers successfully mmap'ed
    struct frame_buffer *buffers = NULL;
    enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
    struct v4l2_buffer buf;
    struct v4l2_plane planes[FMT_NUM_PLANES];

    if (argc < 3) {
        printf("usage: v4l2_test dev_name save_file\n");
        return EXIT_FAILURE;
    }

    char *dev_name = argv[1];        // Camera device node, e.g. /dev/video22
    char *save_file_name = argv[2];  // File to store the frame in

    int file_fd = open(save_file_name, O_RDWR | O_CREAT | O_TRUNC, 0666);
    if (file_fd < 0) {
        printf("cannot open %s\n", save_file_name);
        return EXIT_FAILURE;
    }

    int dev_fd = open(dev_name, O_RDWR | O_NONBLOCK, 0);
    if (dev_fd < 0) {
        printf("cannot open %s\n", dev_name);
        close(file_fd);
        return EXIT_FAILURE;
    }

    // Get camera parameters
    struct v4l2_capability cap;
    memset(&cap, 0, sizeof(cap));
    if (ioctl(dev_fd, VIDIOC_QUERYCAP, &cap) < 0) {
        printf("VIDIOC_QUERYCAP failed\n");
    } else {
        printf(" driver: %s\n", cap.driver);
        printf(" card: %s\n", cap.card);
        printf(" bus_info: %s\n", cap.bus_info);
        printf(" version: %08X\n", cap.version);
        printf(" capabilities: %08X\n", cap.capabilities);
    }

    // Set the video format. With the multi-planar buffer type the format
    // must be filled in through fmt.fmt.pix_mp, not fmt.fmt.pix.
    struct v4l2_format fmt;
    memset(&fmt, 0, sizeof(fmt));
    fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
    fmt.fmt.pix_mp.width = 1632;
    fmt.fmt.pix_mp.height = 1224;
    fmt.fmt.pix_mp.pixelformat = V4L2_PIX_FMT_NV12;
    fmt.fmt.pix_mp.num_planes = FMT_NUM_PLANES;
    if (ioctl(dev_fd, VIDIOC_S_FMT, &fmt) < 0) {
        printf("VIDIOC_S_FMT failed\n");
        goto cleanup;
    }

    // Request kernel buffers; the driver may adjust req.count
    struct v4l2_requestbuffers req;
    memset(&req, 0, sizeof(req));
    req.count = 1;
    req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
    req.memory = V4L2_MEMORY_MMAP;
    if (ioctl(dev_fd, VIDIOC_REQBUFS, &req) < 0) {
        printf("VIDIOC_REQBUFS failed\n");
        goto cleanup;
    }
    if (req.count < 1) {
        printf("Insufficient buffer memory\n");
        goto cleanup;
    }

    buffers = calloc(req.count, sizeof(*buffers));
    if (buffers == NULL) {
        printf("out of memory\n");
        goto cleanup;
    }

    // Query each buffer and map it into user space
    for (unsigned int i = 0; i < req.count; ++i) {
        memset(&buf, 0, sizeof(buf));
        memset(planes, 0, sizeof(planes));
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = i;
        buf.m.planes = planes;
        buf.length = FMT_NUM_PLANES;   // number of entries in planes[]
        if (ioctl(dev_fd, VIDIOC_QUERYBUF, &buf) < 0) {
            printf("VIDIOC_QUERYBUF failed\n");
            goto cleanup;
        }
        buffers[i].length = buf.m.planes[0].length;
        buffers[i].start = mmap(NULL, buf.m.planes[0].length,
                                PROT_READ | PROT_WRITE, MAP_SHARED,
                                dev_fd, buf.m.planes[0].m.mem_offset);
        if (buffers[i].start == MAP_FAILED) {
            printf("mmap failed\n");
            goto cleanup;
        }
        n_buffers++;
    }

    // Queue all buffers so the driver can fill them
    for (unsigned int i = 0; i < n_buffers; ++i) {
        memset(&buf, 0, sizeof(buf));
        memset(planes, 0, sizeof(planes));
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = i;
        buf.m.planes = planes;
        buf.length = FMT_NUM_PLANES;
        if (ioctl(dev_fd, VIDIOC_QBUF, &buf) < 0) {
            printf("VIDIOC_QBUF failed\n");
            goto cleanup;
        }
    }

    // Start capturing; this calls the driver's stream-on handler
    if (ioctl(dev_fd, VIDIOC_STREAMON, &type) < 0) {
        printf("VIDIOC_STREAMON failed\n");
        goto cleanup;
    }
    streaming = 1;

    // Discard the first frames and keep the last one
    unsigned int captured = 0;
    while (captured < CAPTURE_FRAMES) {
        fd_set fds;
        struct timeval tv = { .tv_sec = 2, .tv_usec = 0 };  // 2 s timeout
        FD_ZERO(&fds);
        FD_SET(dev_fd, &fds);
        int r = select(dev_fd + 1, &fds, NULL, NULL, &tv);
        if (r == -1) {
            if (errno == EINTR)
                continue;
            printf("select failed\n");
            goto cleanup;
        }
        if (r == 0) {
            printf("select timeout\n");
            goto cleanup;
        }

        memset(&buf, 0, sizeof(buf));
        memset(planes, 0, sizeof(planes));
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.m.planes = planes;
        buf.length = FMT_NUM_PLANES;
        if (ioctl(dev_fd, VIDIOC_DQBUF, &buf) < 0) {  // take a filled buffer
            if (errno == EAGAIN)
                continue;                              // not ready yet, wait again
            printf("VIDIOC_DQBUF failed\n");
            goto cleanup;
        }
        assert(buf.index < n_buffers);
        printf("buf.index dq is %u\n", buf.index);

        if (++captured == CAPTURE_FRAMES) {
            // Write the last frame before its buffer could be reused
            size_t size = buf.m.planes[0].bytesused;
            ssize_t written = write(file_fd, buffers[buf.index].start, size);
            if (written < 0 || (size_t)written != size) {
                printf("write failed\n");
                goto cleanup;
            }
            fsync(file_fd);
            break;
        }

        // Discard this frame and hand the buffer back to the driver
        if (ioctl(dev_fd, VIDIOC_QBUF, &buf) < 0) {
            printf("VIDIOC_QBUF failed\n");
            goto cleanup;
        }
    }
    status = EXIT_SUCCESS;

cleanup:
    // Stop streaming before unmapping the buffers
    if (streaming && ioctl(dev_fd, VIDIOC_STREAMOFF, &type) < 0)
        printf("VIDIOC_STREAMOFF failed\n");
    for (unsigned int i = 0; i < n_buffers; ++i)
        if (munmap(buffers[i].start, buffers[i].length) < 0)
            printf("munmap failed\n");
    free(buffers);
    close(dev_fd);
    close(file_fd);
    return status;
}
```

Save the source code as v4l2_test.c and compile it with the cross-compiler from the SDK:

```shell
aarch64-none-linux-gnu-gcc v4l2_test.c -o v4l2_test
```

Copy v4l2_test to the board and run it:

```shell
./v4l2_test /dev/video22 /tmp/isp.out
```

This captures one frame and saves it to /tmp/isp.out. Copy the file to your PC and view its contents with YUView.
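If YUView is not at hand, a quick check of the capture is to dump only the luma (Y) plane as a grayscale PGM image, which many image viewers open directly. In NV12 the first width x height bytes are the Y plane, so no color conversion is needed. This is a sketch assuming a tightly packed frame (no padding at the end of each line); nv12_luma_to_pgm is a hypothetical helper name.

```c
#include <stdio.h>

/* Write the Y plane of an NV12 frame as a binary PGM (P5) image.
 * Only the first width*height bytes of `frame` are used; the CbCr
 * plane that follows them is ignored. Returns 0 on success. */
static int nv12_luma_to_pgm(const unsigned char *frame, unsigned width,
                            unsigned height, FILE *out)
{
    if (fprintf(out, "P5\n%u %u\n255\n", width, height) < 0)
        return -1;
    size_t n = (size_t)width * height;
    return fwrite(frame, 1, n, out) == n ? 0 : -1;
}
```

For the 1632x1224 frame written by v4l2_test, read 1632 * 1224 * 3 / 2 bytes from /tmp/isp.out into a buffer and call `nv12_luma_to_pgm(frame, 1632, 1224, out)` with `out` opened by `fopen("isp.pgm", "wb")`.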