¶ Introduction to using the RK NPU

The RK3588S has an NPU with 6 TOPS of computing power. Rockchip's NPU currently uses an in-house architecture and can only be operated through closed-source drivers and libraries.

Rockchip's NPU SDK is divided into two parts.

The PC side uses rknn-toolkit2 for model conversion, inference, and performance evaluation. It converts models from mainstream frameworks such as Caffe, TensorFlow, TensorFlow Lite, ONNX, DarkNet, and PyTorch into RKNN models. It can then use an RKNN model to simulate inference on the PC and measure computation time and memory overhead.

The board side is the rknn runtime environment. It contains a set of C API libraries, the driver modules that communicate with the NPU, executable programs, and so on.

This article describes how to use Rockchip's NPU SDK.

¶ Related resource download links

¶ Install docker

The following commands are run on an x86 Ubuntu host, not on the YY3568.

rknn-toolkit2 has many runtime dependencies, so it is recommended to set up the rknn PC environment with Docker. The Docker image provided by Rockchip already contains everything that is needed.

1. Uninstall old Docker versions:

sudo apt-get remove docker docker-engine docker.io containerd runc

2. Install the dependencies:

sudo apt-get install apt-transport-https ca-certificates curl gnupg2 software-properties-common

3. Add Docker's GPG public key to the trusted keys:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

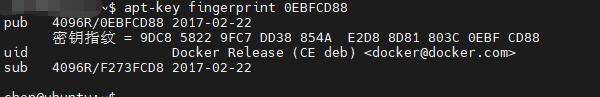

To verify that the key was added successfully, run:

apt-key fingerprint 0EBFCD88

4. Add the Docker repository and install Docker:

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

sudo apt-get update

sudo apt-get install docker-ce

To verify that the installation succeeded, run:

docker -v
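You may want to script this check, for example in a setup script. The following Python sketch runs `docker -v` and extracts the version number. It assumes the output has the usual form `Docker version X.Y.Z, build <commit>`. The function names are hypothetical helpers, not part of Docker or rknn-toolkit2.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_docker_version(output: str) -> Optional[Tuple[int, ...]]:
    """Extract (major, minor, patch, ...) from text like 'Docker version 20.10.17, build abc123'."""
    match = re.search(r"Docker version (\d+(?:\.\d+)*)", output)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


def installed_docker_version() -> Optional[Tuple[int, ...]]:
    """Run `docker -v` and parse it; return None if docker is not on PATH or the output is unexpected."""
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(
        ["docker", "-v"],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
        check=False,
    )
    return parse_docker_version(result.stdout)


if __name__ == "__main__":
    version = installed_docker_version()
    if version is None:
        print("docker not found: re-check the installation steps")
    else:
        print("docker is installed, version " + ".".join(str(n) for n in version))
```

The sketch uses `stdout=subprocess.PIPE` rather than `capture_output=True`, so it also works on older Python 3 releases.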

¶ Download and run rknn docker

The following commands are run on an x86 Ubuntu host, not on the YY3568.

First, download rknn-toolkit2 from:

https://github.com/rockchip-linux/rknn-toolkit2

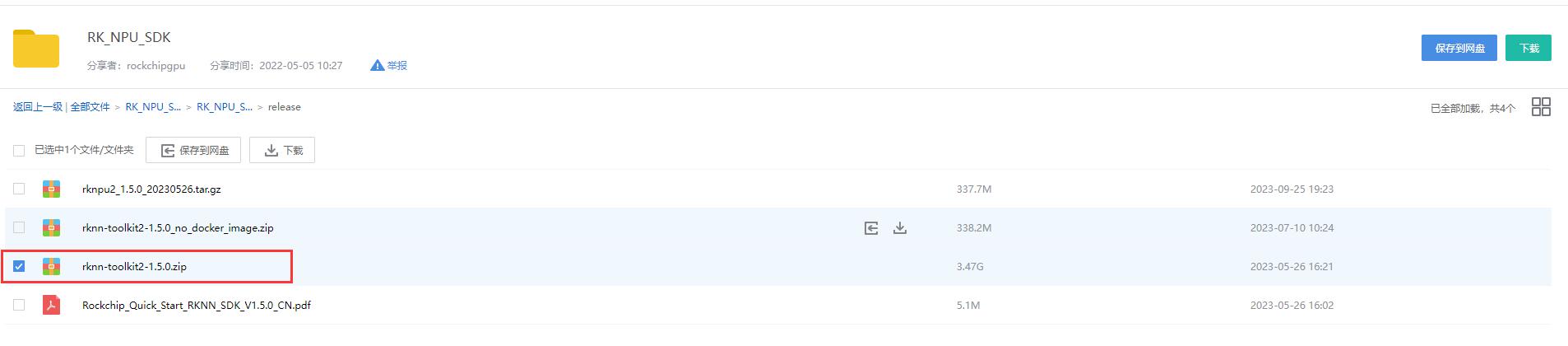

Note that the Docker image is not in this repository. Instead, the repository page links to Rockchip's Baidu Netdisk share, where you can get the Docker image.

Open the link, find the Docker image archive (for the version used here, rknn-toolkit2-1.3.0-cp36-docker.tar.gz), and download it.

Newer versions of rknn-toolkit2 can also be found in this netdisk if you want to update. This article uses version 1.3, the same version as in the full SDK.

After downloading, go to the directory containing the Docker image archive and run:

sudo docker load --input rknn-toolkit2-1.3.0-cp36-docker.tar.gz

Then execute

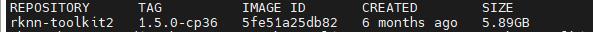

sudo docker images

You can see that this image has been loaded
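You can also check for the image from a script instead of reading the table by eye, because `docker images` accepts a Go-template `--format` option. The sketch below lists images as `repository:tag` and looks for `rknn-toolkit2:1.3.0-cp36`, the tag used by the `docker run` command in this article. Its helpers are hypothetical, not part of the toolkit. Like the commands in this section, it may need `sudo` unless your user is in the `docker` group; without access it returns an empty list.

```python
import shutil
import subprocess
from typing import Iterable, List

# repository:tag used by the docker run command in this article
EXPECTED_IMAGE = "rknn-toolkit2:1.3.0-cp36"


def image_in_list(image_lines: Iterable[str], image: str = EXPECTED_IMAGE) -> bool:
    """True if `image` appears as one of the 'repository:tag' lines."""
    return any(line.strip() == image for line in image_lines)


def list_local_images() -> List[str]:
    """Return local images as 'repository:tag' strings, or [] if docker is missing or inaccessible."""
    if shutil.which("docker") is None:
        return []
    result = subprocess.run(
        ["docker", "images", "--format", "{{.Repository}}:{{.Tag}}"],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return result.stdout.splitlines()


if __name__ == "__main__":
    if image_in_list(list_local_images()):
        print(EXPECTED_IMAGE + " is loaded")
    else:
        print(EXPECTED_IMAGE + " not found (try sudo, or re-run docker load)")
```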

Then start a container with:

sudo docker run -t -i --privileged -v /dev/bus/usb:/dev/bus/usb -v $(pwd)/rknn-toolkit2/examples/onnx/yolov5:/rknn_yolov5_demo rknn-toolkit2:1.3.0-cp36 /bin/bash

Here -v maps a host directory into the Docker container. The directory mapped above is an example from rknn-toolkit2, but other directories can be mapped as well. The /dev/bus/usb mapping is needed later for debugging over adb. If your board provides an adb service, keep it; if not, it is not needed.

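Replace $(pwd)/rknn-toolkit2 in the command above with the actual path of the rknn-toolkit2 project, that is, the directory that contains the examples/onnx/yolov5 folder. Since $(pwd) expands to the current directory, the command works unchanged if you run it from the directory that contains rknn-toolkit2.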
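`$(pwd)` only resolves correctly from the right working directory, so building the command from an explicit path can be less error-prone. The following Python sketch assembles the same `docker run` arguments as the command above and checks that `examples/onnx/yolov5` exists under the given toolkit directory. It also makes the `/dev/bus/usb` mapping optional. The helper name and the `/home/user/rknn-toolkit2` path are hypothetical.

```python
import os
import shlex
from typing import List

IMAGE = "rknn-toolkit2:1.3.0-cp36"
CONTAINER_DEMO_DIR = "/rknn_yolov5_demo"


def docker_run_args(toolkit_dir: str, map_usb: bool = True, check_exists: bool = True) -> List[str]:
    """Build the article's docker run command with an explicit rknn-toolkit2 path."""
    example_dir = os.path.join(os.path.abspath(toolkit_dir), "examples", "onnx", "yolov5")
    if check_exists and not os.path.isdir(example_dir):
        raise FileNotFoundError(example_dir + " not found; point toolkit_dir at the rknn-toolkit2 checkout")
    args = ["sudo", "docker", "run", "-t", "-i", "--privileged"]
    if map_usb:
        # Only needed for adb debugging against the board.
        args += ["-v", "/dev/bus/usb:/dev/bus/usb"]
    # Map the yolov5 example into the container at /rknn_yolov5_demo.
    args += ["-v", example_dir + ":" + CONTAINER_DEMO_DIR, IMAGE, "/bin/bash"]
    return args


if __name__ == "__main__":
    # Hypothetical path: replace with the location of your rknn-toolkit2 checkout.
    cmd = docker_run_args("/home/user/rknn-toolkit2", check_exists=False)
    print(" ".join(shlex.quote(a) for a in cmd))
```

The printed line can be pasted into a terminal, or the list can be passed directly to `subprocess.run`.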

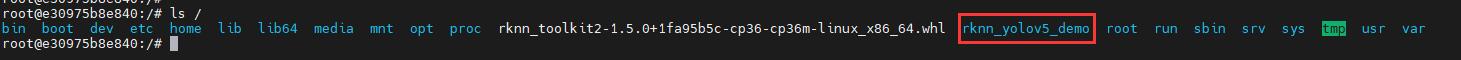

After entering the container, run ls in the root directory. You should see the rknn_yolov5_demo folder.

¶ Run rknn-toolkit2 to generate the model and run inference

Inside the container, go to /rknn_yolov5_demo and run:

python3 ./test.py

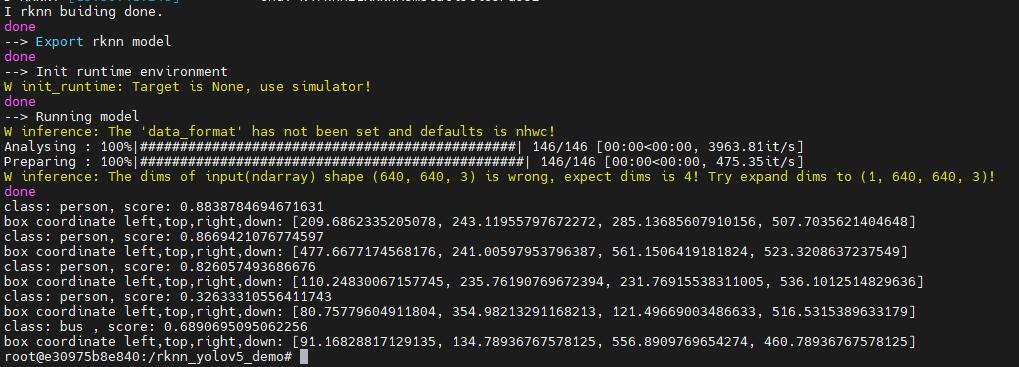

The script produces the result shown in the screenshot.

Because this directory is mapped from the host, the converted model is also stored in rknn-toolkit2/examples/onnx/yolov5 on the host. There, yolov5s.rknn is the model in RKNN format.

The inference result is saved as result.jpg in the same directory. In the comparison figure, the original image is on the left and the inference result is on the right.

¶ Board-side execution

First, go back to rknn-toolkit2 and regenerate the RKNN model for board-side execution. The model generated in the previous section runs in simulation on the PC. To generate a model for the target platform, you need to modify test.py.

If you are not using adb, just add the parameter target_platform='rk3588' to rknn.config. If you have adb and want to connect to the board for debugging, also pass target='rk3588' to rknn.init_runtime.
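The relevant parts of test.py can be sketched as follows. This is an outline under assumptions, not the actual test.py. The `mean_values`/`std_values` shown are assumed to match the yolov5 example, and the helper names are hypothetical. Only these `rknn.api.RKNN` methods are called: `config`, `load_onnx`, `build`, `export_rknn`, `init_runtime`, and `release`. `target_platform` in `config` selects the chip the model is built for. `target` in `init_runtime` selects where inference runs: the PC simulator (no target) or the board connected over adb.

```python
from typing import Any, Dict

TARGET = "rk3588"


def config_kwargs(for_board: bool) -> Dict[str, Any]:
    """Arguments for rknn.config(); mean/std are assumed to match the yolov5 example."""
    kwargs: Dict[str, Any] = {"mean_values": [[0, 0, 0]], "std_values": [[255, 255, 255]]}
    if for_board:
        # Build the model for the RK3588 NPU.
        kwargs["target_platform"] = TARGET
    return kwargs


def init_runtime_kwargs(use_adb: bool) -> Dict[str, Any]:
    """Arguments for rknn.init_runtime(); without a target, inference runs in the PC simulator."""
    return {"target": TARGET} if use_adb else {}


def convert(onnx_path: str, rknn_path: str, dataset: str,
            for_board: bool = True, use_adb: bool = False) -> None:
    """Outline of the conversion part of test.py; needs rknn-toolkit2, e.g. inside the container."""
    from rknn.api import RKNN  # lazy import: the helpers above work without the toolkit

    rknn = RKNN()
    try:
        rknn.config(**config_kwargs(for_board))
        if rknn.load_onnx(model=onnx_path) != 0:
            raise RuntimeError("load_onnx failed")
        if rknn.build(do_quantization=True, dataset=dataset) != 0:
            raise RuntimeError("build failed")
        if rknn.export_rknn(rknn_path) != 0:
            raise RuntimeError("export_rknn failed")
        if rknn.init_runtime(**init_runtime_kwargs(use_adb)) != 0:
            raise RuntimeError("init_runtime failed")
        # test.py then preprocesses an image and calls rknn.inference(inputs=[img]); omitted here.
    finally:
        rknn.release()
```

For example, assume the example directory contains yolov5s.onnx and dataset.txt. Then `convert("yolov5s.onnx", "yolov5s.rknn", "./dataset.txt")` would correspond to the no-adb case, and passing `use_adb=True` to the adb case.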

Then run python3 ./test.py again in the container to get a yolov5s.rknn file that is ready to run on the RK3588 device.

Next, on the host, open the rknpu2 folder and again choose the yolov5 demo:

cd rknpu2/examples/rknn_yolov5_demo

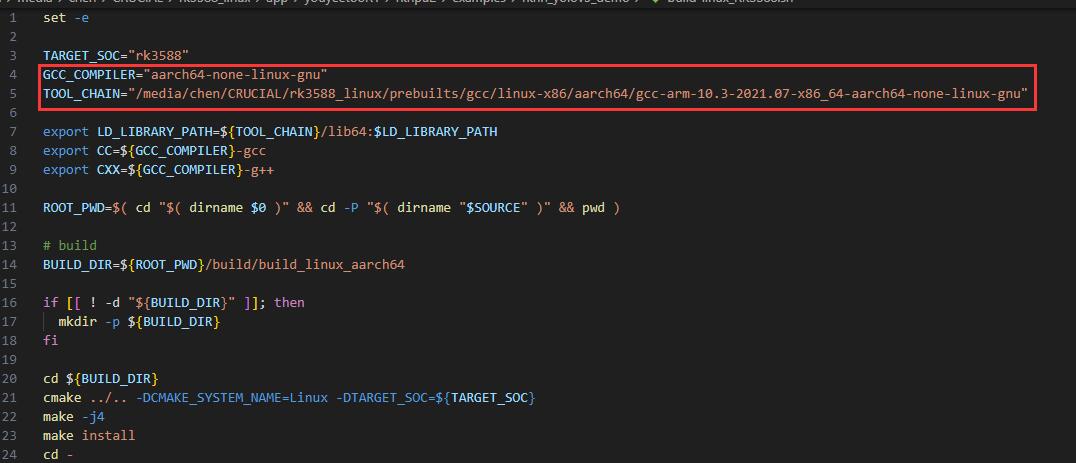

Modify the application build script build-linux_RK3588.sh. The cross-compilation toolchain is in prebuilts/gcc/linux-x86/aarch64 under the SDK directory. Set the PATH and TOOL_CHAIN variables in the script to match your actual SDK installation path.
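To find values for PATH and TOOL_CHAIN, you can list the cross-compilers under `prebuilts/gcc/linux-x86/aarch64`. The sketch below makes two assumptions. First, the toolchain follows the usual layout `<toolchain>/bin/<prefix>-gcc`. Second, TOOL_CHAIN should point at the toolchain root, the directory containing `bin`. Check the second assumption against how your copy of build-linux_RK3588.sh uses the variable. The helper names and the script name in the usage comment are hypothetical.

```python
import glob
import os
import sys
from typing import Dict, List


def find_aarch64_gcc(sdk_root: str) -> List[str]:
    """List executable '*-gcc' compilers under <sdk_root>/prebuilts/gcc/linux-x86/aarch64/*/bin."""
    pattern = os.path.join(sdk_root, "prebuilts", "gcc", "linux-x86", "aarch64", "*", "bin", "*-gcc")
    return sorted(p for p in glob.glob(pattern) if os.access(p, os.X_OK))


def toolchain_vars(gcc_path: str) -> Dict[str, str]:
    """Assumed mapping: TOOL_CHAIN is the toolchain root, and its bin/ directory goes on PATH."""
    bin_dir = os.path.dirname(gcc_path)
    return {"TOOL_CHAIN": os.path.dirname(bin_dir), "PATH_PREFIX": bin_dir}


if __name__ == "__main__":
    # Usage (hypothetical script name): python3 find_toolchain.py /path/to/sdk
    sdk = sys.argv[1] if len(sys.argv) > 1 else "."
    for gcc in find_aarch64_gcc(sdk):
        values = toolchain_vars(gcc)
        print("# compiler: " + gcc)
        print("TOOL_CHAIN=" + values["TOOL_CHAIN"])
        print("PATH=" + values["PATH_PREFIX"] + ":$PATH")
```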

Run build-linux_RK3588.sh. When it finishes, the rknn_yolov5_demo executable is generated.

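Copy the following to the same path on the board, using ssh, adb, or similar:

- the executable
- the yolov5s.rknn model generated for the RK3588
- the bus.jpg and coco_80_labels_list.txt files from the model folder

Keep the model files in a model subfolder next to the executable, since the run command below refers to ./model/.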
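If the board is reachable over adb, the copy can be scripted. The sketch below only builds and prints `adb push` commands; it does not run them. The commands recreate the layout the run command expects: the executable in one directory, with the model files in a `model` subfolder next to it. The destination `/data/rknn_yolov5_demo` and all host paths are hypothetical, so substitute your own.

```python
import os
import posixpath
import shlex
from typing import List

# Hypothetical destination on the board; use any writable directory.
REMOTE_DIR = "/data/rknn_yolov5_demo"
MODEL_FILES = ["bus.jpg", "coco_80_labels_list.txt"]


def adb_push_commands(executable: str, rknn_model: str, model_dir: str,
                      remote_dir: str = REMOTE_DIR) -> List[List[str]]:
    """Build adb commands placing the executable in remote_dir and the model files in remote_dir/model."""
    remote_model_dir = posixpath.join(remote_dir, "model")
    cmds = [
        ["adb", "shell", "mkdir", "-p", remote_model_dir],
        ["adb", "push", executable, remote_dir + "/"],
        ["adb", "push", rknn_model, remote_model_dir + "/"],
    ]
    for name in MODEL_FILES:
        cmds.append(["adb", "push", os.path.join(model_dir, name), remote_model_dir + "/"])
    return cmds


if __name__ == "__main__":
    # Hypothetical host paths: the built executable, the rk3588 model, and the demo's model folder.
    for cmd in adb_push_commands("rknn_yolov5_demo", "yolov5s.rknn", "model"):
        print(" ".join(shlex.quote(a) for a in cmd))
```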

Then run

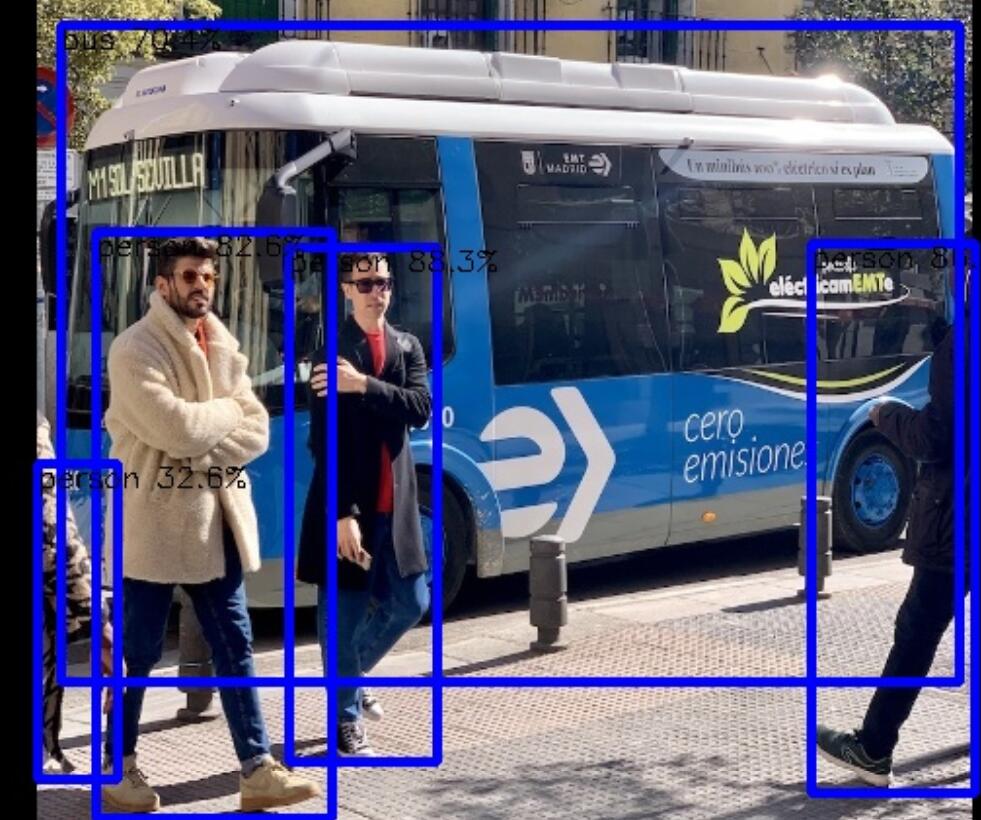

./rknn_yolov5_demo ./model/yolov5s.rknn ./model/bus.jpg

This generates out.jpg in the same directory. Open it to view the detection result.
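If the board has no display, you can run the demo and copy out.jpg back to the host over adb. The sketch below builds the two commands. It assumes the files were copied to the hypothetical directory `/data/rknn_yolov5_demo` on the board, so change `REMOTE_DIR` to wherever you placed them.

```python
import posixpath
import shlex
from typing import List

# Hypothetical directory on the board that holds rknn_yolov5_demo and model/.
REMOTE_DIR = "/data/rknn_yolov5_demo"
DEMO_CMD = "./rknn_yolov5_demo ./model/yolov5s.rknn ./model/bus.jpg"


def run_and_fetch_commands(remote_dir: str = REMOTE_DIR, local_out: str = "out.jpg") -> List[List[str]]:
    """Run the demo in remote_dir on the board, then copy the generated out.jpg back to the host."""
    return [
        # adb shell hands the string to the board's shell, so `cd ... &&` works.
        ["adb", "shell", "cd " + shlex.quote(remote_dir) + " && " + DEMO_CMD],
        ["adb", "pull", posixpath.join(remote_dir, "out.jpg"), local_out],
    ]


if __name__ == "__main__":
    for cmd in run_and_fetch_commands():
        print(" ".join(shlex.quote(a) for a in cmd))
```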

The result is essentially the same as the PC simulation, with only small differences in confidence scores.

This completes the full workflow for an RKNN demo, from PC-side conversion and simulated inference to execution on the board.